Silly prosthetics, cheap cardboard sets, stilted dialogues, CG that doesn't match the background, and painfully one-dimensional characters cannot be overcome with a 'sci-fi' setting. (I'm sure there are those of you out there who think Babylon 5 is good sci-fi TV. It's clichéd and uninspiring.) While US viewers might like emotion and character development, sci-fi is a genre that does not take itself seriously (cf. [...]). It may treat important issues, yet not as a serious philosophy. It's really difficult to care about the characters here as they are not simply foolish, just missing a spark of life. Their actions and reactions are wooden and predictable, often painful to watch. The makers of Earth KNOW it's rubbish as they have to always say "Gene Roddenberry's Earth." otherwise people would not continue watching. Roddenberry's ashes must be turning in their orbit as this dull, cheap, poorly edited (watching it without advert breaks really brings this home) trudging Trabant of a show lumbers into space. And then bring him back as another actor.

Long Short-Term Memory layer - Hochreiter 1997. See the Keras RNN API guide for details about the usage of the RNN API. State space models (SSMs), such as hidden Markov models (HMM) and linear dynamical systems (LDS), have been the workhorse of sequence modeling in the past. Learn how recurrent neural networks use sequential data to solve common [...]. [...] within the neural network, eliminating some of the complexity in the RNN model. `model = torch.nn.Sequential(torch.nn.LSTM(Din, H), torch.nn.` [...]
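The truncated `torch.nn.Sequential` fragment above chains `torch.nn.LSTM` with further layers. One thing to watch for: `torch.nn.LSTM` returns a tuple `(output, (h_n, c_n))`, so the next layer in a `Sequential` cannot consume it directly. The sketch below is one possible way around that, not necessarily what the original snippet did. The `LastStep` helper, the sizes `D_in`, `H`, `D_out`, and the use of `batch_first=True` are all assumptions made for illustration.

```python
import torch
from torch import nn


class LastStep(nn.Module):
    """Hypothetical helper: unpack the LSTM result and keep the last time step."""

    def forward(self, lstm_result):
        output, _ = lstm_result      # nn.LSTM returns (output, (h_n, c_n))
        return output[:, -1, :]      # shape (batch, hidden) when batch_first=True


# Assumed sizes, chosen only for the example.
D_in, H, D_out = 8, 16, 2

model = nn.Sequential(
    nn.LSTM(D_in, H, batch_first=True),  # input shape: (batch, time, features)
    LastStep(),                          # tuple -> tensor of shape (batch, H)
    nn.Linear(H, D_out),                 # e.g. two-class logits
)

x = torch.randn(4, 10, D_in)  # 4 sequences, 10 time steps each
y = model(x)                  # shape (4, D_out)
```

Taking only the last time step is a common choice for sequence classification; other reductions (mean over time, or using `h_n`) would work with the same pattern.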

# Sequential model lstm tv

I tried to like this, I really did, but it is to good TV sci-fi as Babylon 5 is to Star Trek (the original). Sci-fi movies/TV are usually underfunded, under-appreciated and misunderstood.

# Sequential model lstm free

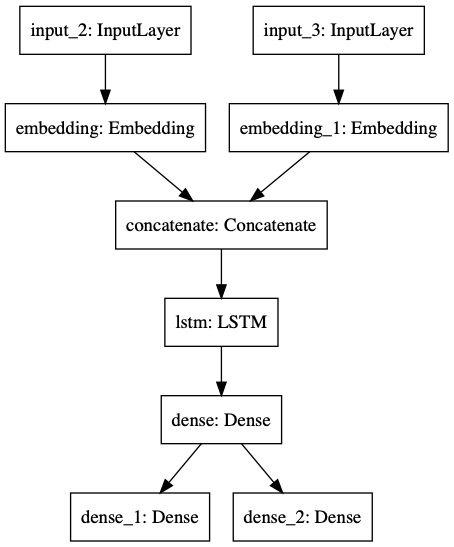

I love sci-fi and am willing to put up with a lot.

Long Short-Term Memory (LSTM) networks are deep, sequential neural networks that allow information to persist. An LSTM is a special type of recurrent neural network (RNN) that is capable of handling the vanishing gradient problem faced by plain RNNs, and it is currently being used in a variety of domains to solve sequence problems. Sequence problems can be broadly categorized into the following categories: One-to-One, where there is one input and one output. Bidirectional LSTMs are an extension of traditional LSTMs that can improve model performance on sequence classification problems. In problems where all timesteps of the input sequence are available, Bidirectional LSTMs train two LSTMs instead of one on the input sequence. LSTM+TGA: This model uses an LSTM with an interactive attention mechanism which only considers the intra-sequence irregular temporality item tu,q and t.

As far as I know, there's nothing like a SplitTable or a SelectTable in PyTorch. That said, you are allowed to concatenate an arbitrary number of modules or blocks within a single architecture, and you can use this property to retrieve the output of a certain layer. Let's make it clearer with a simple example. Suppose I want to build a simple two-layer MLP and retrieve the output of each layer. I can build a custom class inheriting from `nn.Module`: `class MyMLP(nn.Module)`. Its `__init__(self, in_channels, out_channels_1, out_channels_2)` first of all calls the base class constructor, then defines `self.block1 = nn.Linear(in_channels, out_channels_1)` and `self.block2 = nn.Linear(out_channels_1, out_channels_2)`. You MUST implement a `forward(input)` method whenever inheriting from `nn.Module`. By returning both `x` and `first_out` from it, you can now access the first layer's output: `first_out` will be the output of the first block. In your main file you can now declare the custom architecture and use it: `from myFile import MyMLP`, then `my_mlp = MyMLP(in_ch, out_ch_1, out_ch_2)`. Moreover, you could concatenate two `MyMLP` in a more complex model definition and retrieve the output of each one in a similar way. I hope this is enough to clarify, but in case you have more questions, please feel free to ask, since I may have omitted something.
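The two-layer `MyMLP` described above can be put together as a runnable sketch. The class name, constructor arguments, `block1`/`block2`, and the returned `x` and `first_out` come from the text; the body of `forward`, the concrete sizes, and the random input are assumptions filled in for illustration, and the class is defined inline here rather than imported from `myFile`.

```python
import torch
from torch import nn


class MyMLP(nn.Module):
    def __init__(self, in_channels, out_channels_1, out_channels_2):
        # first of all, call the base class constructor
        super().__init__()
        self.block1 = nn.Linear(in_channels, out_channels_1)
        self.block2 = nn.Linear(out_channels_1, out_channels_2)

    # a forward method is required whenever inheriting from nn.Module
    def forward(self, x):
        first_out = self.block1(x)   # output of the first block
        x = self.block2(first_out)   # output of the second block
        # returning both tensors exposes the intermediate output to the caller
        return x, first_out


# Assumed sizes, chosen only for the example.
in_ch, out_ch_1, out_ch_2 = 10, 5, 2
my_mlp = MyMLP(in_ch, out_ch_1, out_ch_2)

batch = torch.randn(3, in_ch)
out, first_out = my_mlp(batch)
```

No activation is placed between the two linear layers because the text does not mention one; in practice a nonlinearity such as `nn.ReLU` would usually go between them.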
